Model Context Protocol: the bridge between LLMs and enterprise systems

What is the Model Context Protocol (MCP)?

The Model Context Protocol is an open standard through which AI applications built on large language models (LLMs) can interact directly with other systems. These systems can be local or external: for example, CRM or ERP systems and knowledge databases. The major benefit of MCP is that the AI application no longer needs a separate, custom-built interface for each system.

MCP acts as a universal language between language models and various data sources. The AI application connects to one or more MCP servers, and each server describes the tools and data it makes available. This enables the model to communicate with the connected systems in a structured manner: based on these descriptions, the LLM “knows” when to query which data source via which MCP server, and it receives the responses in a comprehensible, structured format.
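How the model “knows” which server to use can be sketched in a few lines. In MCP, each server answers a `tools/list` request with its tools, each described by a `name`, a `description` and an `inputSchema` (a JSON Schema); the host application passes these descriptions to the LLM and routes the model's chosen call back to the right server. The sketch below is plain Python rather than an official MCP SDK, and the servers (`crm`, `jira`) and tools (`find_customer`, `list_open_tickets`) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    # Fields an MCP server reports for each tool in a tools/list result.
    name: str
    description: str
    input_schema: dict


@dataclass
class ToolCatalog:
    # Tools shown to the LLM, plus a routing table: tool name -> server id.
    tools: list = field(default_factory=list)
    route: dict = field(default_factory=dict)

    def register_server(self, server_id: str, listed_tools: list) -> None:
        """Add the tools that one server advertised via tools/list."""
        for t in listed_tools:
            if t["name"] in self.route:
                raise ValueError(f"duplicate tool name: {t['name']}")
            self.tools.append(Tool(t["name"], t["description"], t["inputSchema"]))
            self.route[t["name"]] = server_id

    def server_for(self, tool_name: str) -> str:
        """Which server should receive the tools/call for this tool."""
        return self.route[tool_name]


# Hypothetical tools/list results from two servers (illustrative names only).
crm_tools = [{
    "name": "find_customer",
    "description": "Look up a customer record in the CRM by name.",
    "inputSchema": {"type": "object",
                    "properties": {"name": {"type": "string"}},
                    "required": ["name"]},
}]
jira_tools = [{
    "name": "list_open_tickets",
    "description": "List open Jira tickets for a project key.",
    "inputSchema": {"type": "object",
                    "properties": {"project": {"type": "string"}},
                    "required": ["project"]},
}]

catalog = ToolCatalog()
catalog.register_server("crm", crm_tools)
catalog.register_server("jira", jira_tools)

# These descriptions are what the LLM sees when deciding which tool to call.
for tool in catalog.tools:
    print(f"{tool.name}: {tool.description}")
print(catalog.server_for("list_open_tickets"))  # jira
```

Rejecting duplicate tool names is simply one design choice made for this sketch; a host could instead prefix each tool name with its server name.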

MCP is an important step forward in the use of AI. It enables AI applications to:

- Interact independently with the enterprise systems required for a specific task,

- Access the latest data in the required format, and

- Execute actions in peripheral systems that previously required a human agent.

How does the Model Context Protocol work?

MCP has three key components:

1. MCP client (e.g., an agent or a company-specific chatbot with a connected LLM)

The MCP client sends a request to the MCP server. For example, the client asks the server to execute a particular function or retrieve specific information. The MCP client receives the response and then provides it to the user or agent.

2. MCP server (e.g., an abstraction layer above a CRM system)

The server receives requests from the client and translates them into API calls for the target system. It then provides structured responses to the MCP client.

3. Systems, applications, and tools (e.g., Jira, Salesforce, or Open Datastack)

These are the systems that actually provide the data or execute the functions.
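The division of labor between the three components can be sketched as a single round trip. MCP messages use JSON-RPC 2.0: a client invokes a tool with the `tools/call` method, passing the tool's `name` and `arguments`, and the server replies with a `result` whose `content` is a list of items such as `{"type": "text", "text": ...}`. Everything else is an assumption for illustration: `FakeCrm` stands in for a real CRM, the tool `get_customer_status` is hypothetical, and the direct method call replaces the stdio or HTTP transport and the official SDKs a real deployment would use. The error codes -32601 and -32602 are the standard JSON-RPC codes for "Method not found" and "Invalid params".

```python
import json


class FakeCrm:
    """Hypothetical stand-in for the target system (component 3)."""

    def __init__(self) -> None:
        self._status = {"ACME": "active", "Globex": "churned"}

    def get_status(self, customer: str) -> str:
        # A real server would call the CRM's own API here.
        return self._status.get(customer, "unknown")


class CrmMcpServer:
    """Toy MCP-style server (component 2): JSON-RPC in, CRM call, JSON-RPC out."""

    def __init__(self, crm: FakeCrm) -> None:
        self.crm = crm

    @staticmethod
    def _error(req_id, code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id,
                           "error": {"code": code, "message": message}})

    def handle(self, raw_request: str) -> str:
        req = json.loads(raw_request)
        if req.get("method") != "tools/call":
            return self._error(req.get("id"), -32601, "Method not found")
        params = req["params"]
        if params["name"] != "get_customer_status":
            return self._error(req["id"], -32602, f"Unknown tool: {params['name']}")
        # Translate the protocol-level request into a call on the target system.
        status = self.crm.get_status(params["arguments"]["customer"])
        result = {"content": [{"type": "text", "text": status}], "isError": False}
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


def call_tool(server: CrmMcpServer, request_id: int, name: str, arguments: dict) -> str:
    """Client side (component 1): send a tools/call request, return the text result."""
    request = {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
               "params": {"name": name, "arguments": arguments}}
    response = json.loads(server.handle(json.dumps(request)))
    if "error" in response:
        raise RuntimeError(response["error"]["message"])
    return response["result"]["content"][0]["text"]


server = CrmMcpServer(FakeCrm())
print(call_tool(server, 1, "get_customer_status", {"customer": "ACME"}))  # active
```

Replacing `FakeCrm` with a client for the real CRM API would change only the server; the client code stays the same, which is what the standardized protocol is meant to achieve.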

Standardized communication makes connecting new systems easy. Most importantly, the AI application no longer needs an individually integrated interface for each system. A collaboration tool such as Jira may already have an MCP server, whereas for specialist internal applications you may need to develop your own.
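The saving can be made concrete with a rough count, under the simplifying assumption that each of M AI applications needs access to every one of N systems. Point-to-point, every application/system pair needs its own interface; with MCP, each application implements the client side once and each system gets one server:

```latex
\text{point-to-point: } M \cdot N
\qquad
\text{with MCP: } M + N
\qquad
\text{e.g. } M = 3,\; N = 5:\quad 3 \cdot 5 = 15 \ \text{vs.}\ 3 + 5 = 8
```

The all-to-all assumption is the most favorable case for MCP; when each application needs only a few of the systems, the gap is smaller.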

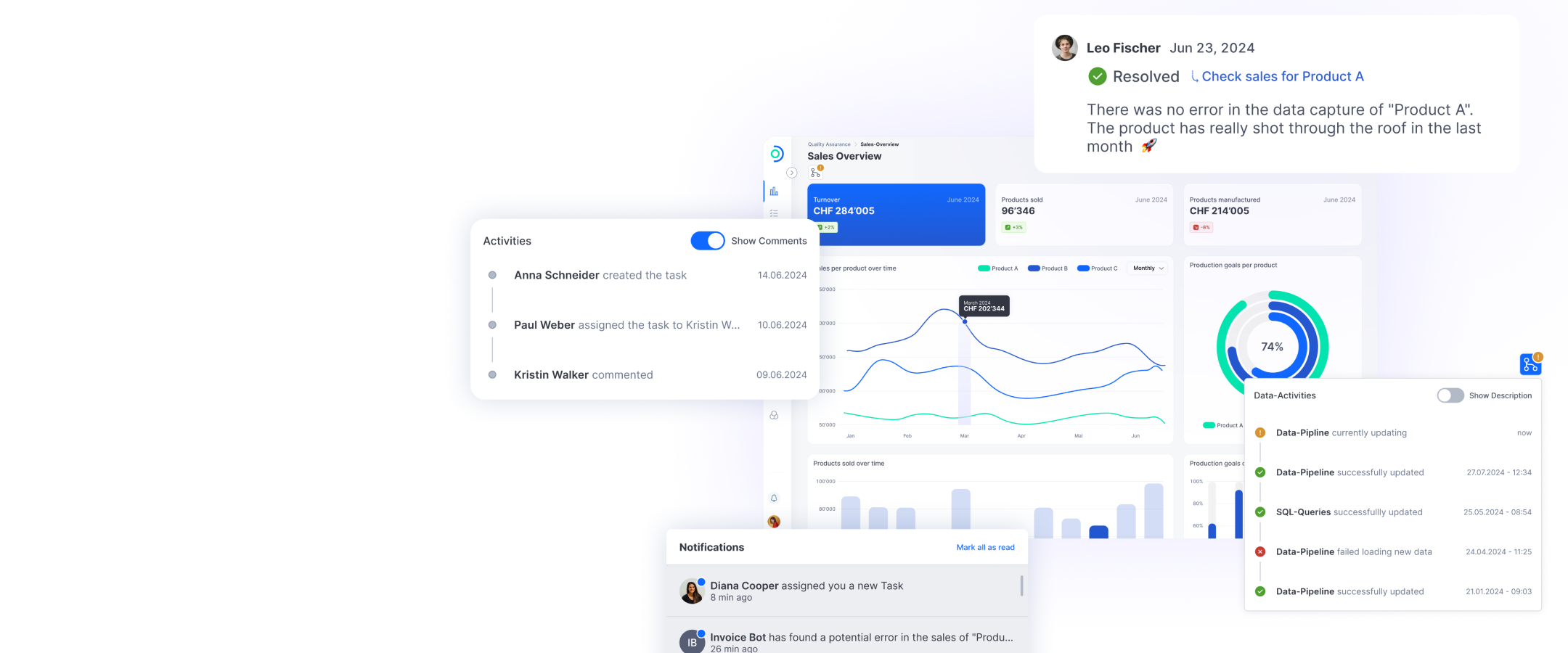

The illustration below shows possible scenarios for how an AI application can communicate with the target systems:

We want to use AI agents. How can MCP help us?

Many companies want to develop AI agents that perform tasks automatically. They aim to automate processes from ticketing through to data analysis. Since these tasks are usually carried out in different tools, the agents must be able to interact with various systems.

This is where MCP comes into play. MCP is the universal bridge between agents and tools. For example, an agent can use MCP to retrieve information from an ERP system, update it in a CRM system and enter a comment in Confluence – all via a single protocol.
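The ERP, CRM and Confluence example can be sketched as one agent task that talks to three servers through the same `tools/call` message shape. The servers, tool names (`get_order`, `update_account`, `add_comment`) and data are hypothetical, and the servers are in-memory functions rather than real MCP servers. In a real agent, the LLM would choose these calls itself; here they are hard-coded to show that only the tool name and arguments change from one system to the next.

```python
import itertools
import json
from typing import Callable, Dict

# A toy "server": takes a JSON-RPC request string, returns a response string.
# Real MCP servers would be reached over stdio or HTTP instead.
Server = Callable[[str], str]
_request_ids = itertools.count(1)


def make_server(tools: Dict[str, Callable[..., str]]) -> Server:
    """Wrap a mapping of tool name -> handler in the tools/call message shape."""
    def handle(raw: str) -> str:
        req = json.loads(raw)
        params = req["params"]
        text = tools[params["name"]](**params["arguments"])
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "result": {"content": [{"type": "text", "text": text}]}})
    return handle


def call_tool(server: Server, name: str, arguments: dict) -> str:
    """Same client code for every system: only the tool name and arguments differ."""
    request = {"jsonrpc": "2.0", "id": next(_request_ids), "method": "tools/call",
               "params": {"name": name, "arguments": arguments}}
    return json.loads(server(json.dumps(request)))["result"]["content"][0]["text"]


# Hypothetical back-end state of the three systems.
crm_accounts = {"ACME": {}}
confluence_comments = []


def get_order(order_id: str) -> str:
    # ERP lookup; this sketch returns text, so structured data travels as JSON.
    return json.dumps({"order_id": order_id, "customer": "ACME", "status": "shipped"})


def update_account(customer: str, last_order: str) -> str:
    crm_accounts[customer]["last_order"] = last_order
    return f"updated {customer}"


def add_comment(page: str, text: str) -> str:
    confluence_comments.append(f"{page}: {text}")
    return "comment added"


erp = make_server({"get_order": get_order})
crm = make_server({"update_account": update_account})
confluence = make_server({"add_comment": add_comment})

# One agent task spanning three systems, all through the same protocol.
order = json.loads(call_tool(erp, "get_order", {"order_id": "4711"}))
call_tool(crm, "update_account",
          {"customer": order["customer"], "last_order": order["order_id"]})
call_tool(confluence, "add_comment",
          {"page": "Customer ACME", "text": f"Order {order['order_id']} is {order['status']}."})
print(confluence_comments)  # ['Customer ACME: Order 4711 is shipped.']
```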

How can I use MCP in my company?

MCP does not compete with established methods such as retrieval-augmented generation (RAG). Instead, it strategically complements them:

- MCP is particularly useful for dynamic, real-time data, such as current Jira tickets or live dashboard information, because no preindexing is required

- For static knowledge databases, RAG with preindexed data is often still more efficient

- Hybrid approaches are the ideal combination of both methods: static data via RAG, dynamic data via MCP
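A hybrid setup can be sketched as a router that answers questions about static content from an index built in advance (the RAG path) and fetches live data at question time (the MCP path). Everything here is an assumption for illustration: the simple word-overlap index stands in for the embedding search of a real RAG pipeline, the `dynamic` flag on each hypothetical `Source` is set by hand, and the `live_lookup` callback stands in for a `tools/call` to an MCP server.

```python
import string
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


def words(text: str) -> List[str]:
    """Lowercase words with surrounding punctuation removed."""
    return [w for w in (t.strip(string.punctuation) for t in text.lower().split()) if w]


@dataclass
class Source:
    name: str
    dynamic: bool  # True: data changes constantly, so query it live


class HybridRouter:
    def __init__(self, static_docs: Dict[str, str], live_lookup: Callable[[str, str], str]):
        # RAG side: build the index once, before any question is asked.
        self.docs = static_docs
        self.index: Dict[str, Set[str]] = {}
        for doc_id, text in static_docs.items():
            for w in words(text):
                self.index.setdefault(w, set()).add(doc_id)
        # MCP side: a callback standing in for a tools/call to an MCP server.
        self.live_lookup = live_lookup

    def context_for(self, source: Source, query: str) -> str:
        if source.dynamic:
            # No preindexing: fetch current data at question time.
            return self.live_lookup(source.name, query)
        # Retrieve preindexed documents that share a word with the query.
        hits: Set[str] = set()
        for w in words(query):
            hits |= self.index.get(w, set())
        return "\n".join(self.docs[d] for d in sorted(hits))


router = HybridRouter(
    static_docs={"policy": "Expense reports are due monthly.",
                 "onboarding": "New staff receive a laptop on day one."},
    live_lookup=lambda source, query: f"[live from {source}] 3 open tickets",
)
print(router.context_for(Source("handbook", dynamic=False), "When are expense reports due?"))
print(router.context_for(Source("jira", dynamic=True), "How many tickets are open?"))
```

The retrieved text would then be passed to the LLM as context; in practice, the choice between the two paths is often left to the model itself rather than to a fixed flag.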

A typical business environment has many peripheral systems, such as:

- Core business systems

- CRM systems, such as Salesforce or Microsoft Dynamics

- ERP systems, such as Abacus or SAP

- Collaboration tools, such as Confluence or Jira

- Data warehouses

Some of these systems already have MCP servers, or have them planned. To connect the remaining systems to their AI agents or LLM applications, companies can develop their own MCP servers.

The goal: a context-sensitive chatbot or agent that can perform actions or use the latest information from various systems. MCP servers act as the crucial bridge between the AI and the enterprise systems: for live data, they remove the need to index databases beforehand, and they spare the AI system an individually integrated interface for each system. This creates an efficient, intelligent flow of information within the company, and potentially for external customers as well.

Does ti&m already have its own MCP servers?

We have developed our own MCP server for our business intelligence platform, Open Datastack. Open Datastack is our modular open-source data platform that combines all stages of data processing, from extraction and analysis to dashboards. Connecting AI systems to this MCP server enables them to interact directly with Open Datastack’s data modules: they can ask questions and even trigger actions without any manual intervention.

How does ti&m support companies with rolling out MCP?

We help companies:

- Evaluate how MCP can benefit their existing or planned AI use cases,

- Develop their own MCP server or make existing applications compatible, and

- Efficiently connect agent systems and AI applications using LLMs to enterprise systems.

Together, we can connect even more of your data, systems, and applications, so that you can work even more efficiently and intelligently in the future.