The 70 % reality check: Agentic AI is live in banking – governance isn’t there yet

AI Agents // Agentic AI has moved from pilots to production. Roughly seven in ten institutions now run agents that don’t just recommend, but also act. Yet control frameworks often trail capabilities, creating exposure on auditability, accountability, and third-party concentration. The prize is real; so are the risks.

Agentic AI represents a shift from decision support to execution under mandate. In payments, the direction is visible: Mastercard is enabling Agent Pay with partners including Stripe, Google, and Antom so authenticated agents can complete purchases based on explicit customer instructions. Visa’s Intelligent Commerce points the same way – delegated shopping with spend controls and consumer final say. Google’s Agent Payments Protocol (AP2) aims to standardize how agents authenticate and pay across rails. When software initiates a transfer or places an order, the firm’s responsibilities don’t disappear; they crystallize. The operating question is no longer “Can we?” but “Can we evidence why the agent acted, on what data, within which limits, and who could stop it?”

Where value shows up today is pragmatic. In agentic commerce, mandates plus pre-transaction risk scoring allow proactive blocks seconds before settlement. In advisory contexts, banks recapture time: UBS has scaled assistants to surface research and context on demand and is distributing clearly labeled AI video summaries of analyst notes for clients – with human review preserved. On the control side, Swiss institutions are expanding internal AI while hardening compliance guardrails; ZKB’s public reporting outlines a programmatic approach to governance. And at retail scale, Nubank's acquisition of Hyperplane underscores the lesson many incumbents are rediscovering: agent quality depends on data plumbing – collection, features, identity, feedback loops – more than on a single model.

What changes competitively is the fiduciary perimeter. If a client's agent can rebalance within agreed bands, contest a disputed charge, or orchestrate receivables and payables, loyalty follows the institution that can deliver delegated outcomes and explain every step. Lock-in comes less from sleek UX and more from reliable mandates, audit trails, and quick human escalation when something looks off. That is why auditability should be treated as a customer feature, not just an internal control.

Regulators make it clear: Existing rules apply

Regulators are not writing a separate AI rulebook for banks; they expect existing regimes to apply. The UK financial markets regulator’s stance is clear: conduct, outsourcing, operational resilience, and model risk obligations all extend to AI systems. FINMA’s guidance points to the same foundation: maintain an AI inventory, classify risks, test and monitor, make decisions traceable, and retain human override – especially where third-party platforms are involved. For cross-border Swiss groups, the implication is straightforward: build one control fabric that satisfies both principle-based expectations and local specifics, and prove it with evidence rather than slogans.

That evidence begins with mandates, not consent. Vague permission to “help me buy” is insufficient once an agent spends money or moves assets. Banks should adopt explicit, revocable mandates that define scope (what the agent may do), limits (how far it may go), the authentication context (device, identity, risk), and the break-glass path for revocation. This is where the ecosystem is converging – AP2 and Agent Pay both assume attestable instructions and strong checks at the handoff to the rail.
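The four mandate elements named above (scope, limits, authentication context, revocation) can be sketched as a small data structure. This is a minimal illustration, not a schema taken from AP2, Agent Pay, or any scheme rulebook: the class name `Mandate`, every field name, the integer `required_auth_level`, and the added expiry date are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal
from typing import FrozenSet, Optional


@dataclass
class Mandate:
    """Hypothetical mandate record; all field names are illustrative."""
    mandate_id: str
    customer_id: str
    allowed_actions: FrozenSet[str]      # scope: what the agent may do
    max_amount_per_action: Decimal       # limit: how far it may go
    currency: str
    required_auth_level: int             # authentication context (assumed ordinal scale)
    expires_at: datetime                 # assumption: mandates carry an expiry
    revoked_at: Optional[datetime] = None

    def revoke(self, now: datetime) -> None:
        """Break-glass path: once revoked, the mandate permits nothing."""
        if self.revoked_at is None:
            self.revoked_at = now

    def permits(self, action: str, amount: Decimal, currency: str,
                auth_level: int, now: datetime) -> bool:
        """Return True only if the action falls inside every mandate boundary."""
        if self.revoked_at is not None or now >= self.expires_at:
            return False
        return (
            action in self.allowed_actions
            and currency == self.currency
            and amount <= self.max_amount_per_action
            and auth_level >= self.required_auth_level
        )
```

An agent would call `permits(...)` before every action and treat `False` as a hard stop; the customer or the bank calls `revoke(...)` to withdraw the mandate.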

Decision telemetry: Why was this action taken?

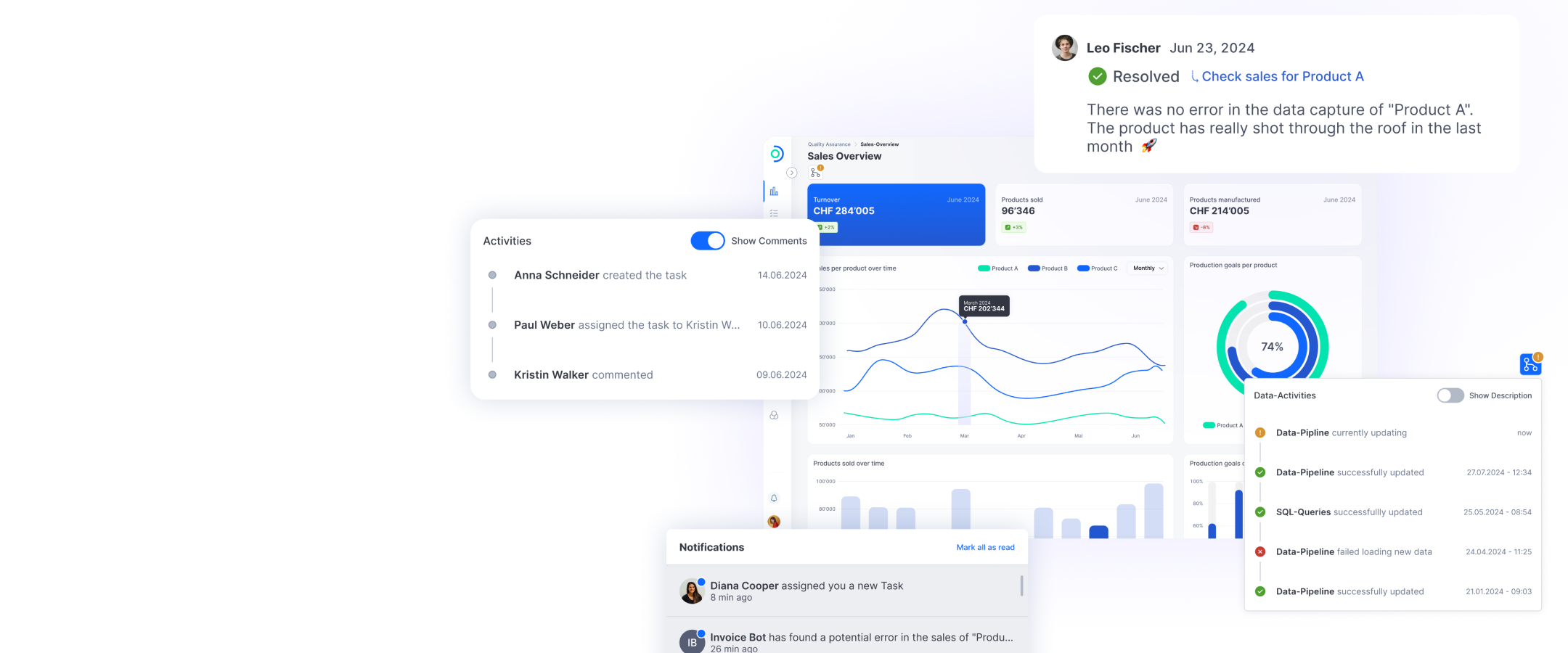

Next is decision telemetry. Treat agents as composite systems, not single models. Capture prompts and inputs, model and policy versions, guardrail hits, confidence measures, human approvals, and outcomes in immutable stores. Make “why this action?” available to frontline staff, auditors, and – when appropriate – clients. Without this, explanations become retrofits and trust degrades precisely when transparency matters.
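One way to approximate an immutable store in application code is a hash-chained, append-only log: each record embeds the hash of its predecessor, so any later edit breaks the chain. The sketch below is an assumption-laden illustration, not a prescribed design. `DecisionLog` and the payload keys are hypothetical names, and a hash chain makes tampering detectable rather than impossible; production systems would typically add write-once storage on top.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder predecessor for the first record


def _digest(prev_hash: str, payload: Dict[str, Any]) -> str:
    """Hash the previous record's hash together with a canonical JSON payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class DecisionLog:
    """Hypothetical append-only log of agent decisions (tamper-evident)."""

    def __init__(self) -> None:
        self._records: List[Dict[str, Any]] = []

    def append(self, payload: Dict[str, Any]) -> str:
        """Store a JSON-serializable decision record and return its hash."""
        prev = self._records[-1]["hash"] if self._records else GENESIS_HASH
        frozen = json.loads(json.dumps(payload))  # copy, so later caller edits don't leak in
        self._records.append(
            {"prev_hash": prev, "payload": frozen, "hash": _digest(prev, frozen)}
        )
        return self._records[-1]["hash"]

    def verify(self) -> bool:
        """Recompute the whole chain; False means a record was altered or reordered."""
        prev = GENESIS_HASH
        for record in self._records:
            if record["prev_hash"] != prev:
                return False
            if record["hash"] != _digest(prev, record["payload"]):
                return False
            prev = record["hash"]
        return True
```

A payload might carry the items listed above, for example `{"prompt": ..., "model_version": ..., "policy_version": ..., "guardrail_hits": [...], "confidence": ..., "approved_by": ..., "outcome": ...}`; those key names are illustrative only.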

Interfaces are the other control point. Most failures propagate at handoffs – agent to payment network, agent to trading venue, agent to CRM. Policy should execute at the boundary: block by default if limits, anomaly, jurisdiction, or confidence thresholds are breached; step up authentication; alert a human; roll back quickly. Staged deployment, shadow mode, and kill-criteria are practical habits, not theatre.
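A boundary check of this kind can be sketched as a pure function that sits between the agent and the rail. Everything here is an assumption for illustration: the names `ActionRequest`, `BoundaryPolicy`, and `evaluate`, the threshold values, and the rule that a missing signal blocks the action (one reading of "block by default").

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum
from typing import FrozenSet, List, Optional, Tuple


class Verdict(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"  # require stronger authentication or human approval
    BLOCK = "block"


@dataclass(frozen=True)
class ActionRequest:
    amount: Optional[Decimal]
    mandate_limit: Optional[Decimal]
    anomaly_score: Optional[float]   # assumed scale: 0.0 normal .. 1.0 highly anomalous
    confidence: Optional[float]      # assumed scale: 0.0 .. 1.0
    jurisdiction: Optional[str]


@dataclass(frozen=True)
class BoundaryPolicy:
    allowed_jurisdictions: FrozenSet[str]
    block_anomaly_at: float = 0.8    # illustrative thresholds, not recommendations
    step_up_anomaly_at: float = 0.5
    min_confidence: float = 0.7


def evaluate(req: ActionRequest, policy: BoundaryPolicy) -> Tuple[Verdict, List[str]]:
    """Return a verdict plus the reasons behind it, blocking when in doubt."""
    signals = (req.amount, req.mandate_limit, req.anomaly_score,
               req.confidence, req.jurisdiction)
    if any(s is None for s in signals):
        return Verdict.BLOCK, ["missing_signal"]

    reasons: List[str] = []
    if req.amount > req.mandate_limit:
        reasons.append("limit_exceeded")
    if req.anomaly_score >= policy.block_anomaly_at:
        reasons.append("anomaly")
    if req.jurisdiction not in policy.allowed_jurisdictions:
        reasons.append("jurisdiction")
    if req.confidence < policy.min_confidence:
        reasons.append("low_confidence")
    if reasons:
        return Verdict.BLOCK, reasons

    if req.anomaly_score >= policy.step_up_anomaly_at:
        return Verdict.STEP_UP, ["elevated_anomaly"]
    return Verdict.ALLOW, []
```

Returning the reasons alongside the verdict lets the same call feed both the human alert and the decision record.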

Third-party dependence deserves explicit treatment. Agent stacks lean on clouds, model providers, vector databases, orchestration layers, and payments partners. Contracts should include audit rights, incident SLAs, data-location constraints, and change notes for model updates. Where concentration risk is unavoidable, keep a minimal viable in-house fallback for essential flows.

First steps for banks: Registers, mandates, telemetry

What to do now is concrete. Start with an AI Agent Register that lists each use case, data and rail touchpoints, risk class, and human-in-the-loop status. Standardize mandate templates for payments, orders, and client communications, aligned to scheme rules and Swiss contract law. Instrument decision telemetry and install boundary guardrails before scale. Pick one high-value process – card disputes, receivables scheduling, KYC refresh – run red-team scenarios (adversarial prompts, vendor outages, market stress), and make the scale/no-scale decision on measured outcomes. Report residual risks and control effectiveness to the board.
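An AI Agent Register can start as a typed list before it becomes a tool. The sketch below covers the four attributes named above; the names `AgentEntry`, `RiskClass`, and `needs_review`, the three-level risk scale, and the review rule (high-risk use cases without a human in the loop get flagged) are all assumptions for illustration, not a regulatory requirement.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List, Tuple


class RiskClass(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AgentEntry:
    """Hypothetical register row; field names are illustrative."""
    use_case: str
    data_touchpoints: Tuple[str, ...]   # data sources the agent reads or writes
    rail_touchpoints: Tuple[str, ...]   # payment or trading rails it can reach
    risk_class: RiskClass
    human_in_the_loop: bool


def needs_review(register: Iterable[AgentEntry]) -> List[str]:
    """List high-risk use cases that run without a human in the loop."""
    return [
        entry.use_case
        for entry in register
        if entry.risk_class is RiskClass.HIGH and not entry.human_in_the_loop
    ]
```

Running `needs_review(...)` over the register gives a short, reviewable list to include in the board report.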

The bottom line

Agentic AI is already embedded in payment flows, financial-crime controls, and client service. The winners won’t be those that deploy the most agents, but those that bind action to mandate, make decisions explainable, and keep humans accountable. In Switzerland, the path is clear: build to FINMA’s risk-based expectations, align cross-border operations to principle-based regimes like the UK financial markets regulator’s, and make auditability visible to clients. That is how autonomous action becomes a trusted service.

ti&m Special “AI & Open Source”